Authoring Interfaces

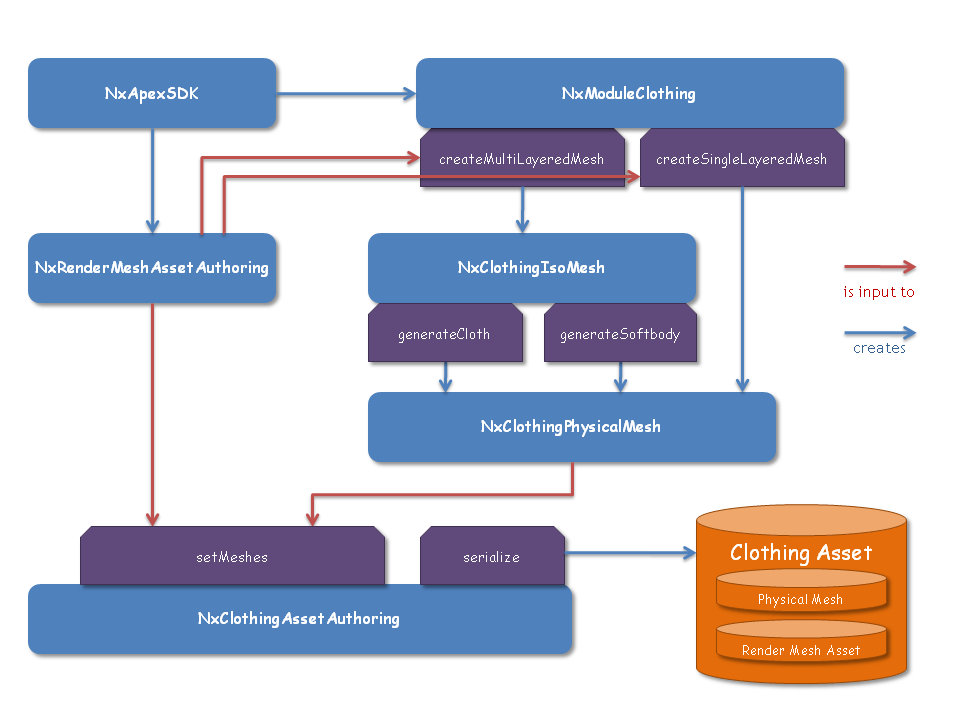

Several tools are provided to create and author clothing assets that can be loaded at run time: plug-ins for the DCC tools Autodesk Maya and 3DS Max, and the standalone ClothingTool, which shows how to implement the authoring interface of the Clothing Module. The diagram above shows the workflow for creating a clothing asset; see the Clothing Module documentation for a more detailed description. This chapter explains that workflow using the ClothingTool as an example.

Initialization

As in the run-time part, a PxPhysics object, a reference to the cooking library, and the APEX objects ApexSDK and ModuleClothing have to be created first:

#include <NvParamUtils.h>

// init PhysX

PxPhysics* m_physxSDK;

PxFoundation* m_foundation;

m_foundation = PxCreateFoundation(PX_PHYSICS_VERSION, ...);

m_physxSDK = PxCreatePhysics( ... );

PxInitExtensions(*m_physxSDK);

// init cooking

PxCookingParams params;

PxCooking* m_cooking;

m_cooking = PxCreateCooking(PX_PHYSICS_VERSION, m_physxSDK->getFoundation(), params);

// init apex sdk

nvidia::apex::ApexSDKDesc apexSdkDesc;

// Let Apex know about our PhysX SDK and cooking library

apexSdkDesc.physXSDK = m_physxSDK;

apexSdkDesc.cooking = m_cooking;

// The output stream is used to report any errors back to the user

apexSdkDesc.outputStream = mErrorReport;

// Our custom render resource manager

apexSdkDesc.renderResourceManager = mRenderResourceManager;

// Our custom named resource handler (NRP)

apexSdkDesc.resourceCallback = mResourceCallback;

// Apex needs an allocator and error stream.

// By default it uses those of the PhysX SDK.

apexSdkDesc.allocator = mApexAllocator;

// Finally, create the Apex SDK

ApexCreateError errorCode;

mApexSDK = nvidia::apex::CreateApexSDK(apexSdkDesc, &errorCode);

// init clothing module

mApexClothingModule = static_cast<nvidia::apex::ModuleClothing*>(mApexSDK->createModule("Clothing"));

if (mApexClothingModule != NULL)

{

NvParameterized::Interface* moduleDesc = mApexClothingModule->getDefaultModuleDesc();

// This optimization is only needed for run time, we turn it off for authoring

NvParameterized::setParamU32(*moduleDesc, "maxUnusedPhysXResources", 0);

mApexClothingModule->init(moduleDesc);

}

// init conversion module

// this module is only needed to convert older parameterized assets to the latest one

mApexClothingLegacyModule = static_cast<nvidia::apex::ModuleClothing*>(mApexSDK->createModule("Clothing_Legacy"));

Graphical Mesh

APEX uses the RenderMeshAsset as a container for graphics meshes. To provide the graphics mesh of the part of the character that is going to be simulated, a RenderMeshAssetAuthoring object has to be created. To do so, a descriptor has to be filled in first:

nvidia::apex::RenderMeshAssetAuthoring::MeshDesc meshDesc;

// a mesh desc must contain one or more submeshes

nvidia::apex::RenderMeshAssetAuthoring::SubmeshDesc submeshDesc;

meshDesc.m_numSubmeshes = 1;

meshDesc.m_submeshes = &submeshDesc;

The mesh has one submesh for each different material name it uses:

// fill out the submeshDesc

submeshDesc.m_numVertices = mVertices.size();

// each submeshDesc must contain one or more vertex buffers

nvidia::apex::RenderMeshAssetAuthoring::VertexBuffer vertexBufferDesc;

submeshDesc.m_materialName = "Submesh Name";

submeshDesc.m_vertexBuffers = &vertexBufferDesc;

submeshDesc.m_numVertexBuffers = 1;

submeshDesc.m_primitive = nvidia::apex::RenderMeshAssetAuthoring::Primitive::TRIANGLE_LIST;

submeshDesc.m_indexType = nvidia::apex::RenderMeshAssetAuthoring::IndexType::UINT;

submeshDesc.m_vertexIndices = &mIndices[0];

submeshDesc.m_numIndices = mIndices.size();

submeshDesc.m_firstVertex = 0;

// we don't use parts for clothing meshes

submeshDesc.m_partIndices = NULL;

submeshDesc.m_numParts = 0;

// set the culling mode

submeshDesc.m_cullMode = nvidia::apex::RenderCullMode::CLOCKWISE;
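
An application whose source mesh stores a single triangle list with a material per triangle first has to split it into one group per material name before filling one SubmeshDesc per group. The following is a minimal sketch of that split only, assuming a hypothetical SubmeshIndices holder and a per-triangle material array; neither is part of the APEX API, and the sketch does not build per-submesh vertex buffers.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <string>
#include <vector>

// Hypothetical holder (not an APEX type): the triangle indices that share one material.
struct SubmeshIndices
{
    std::string materialName;
    std::vector<uint32_t> indices; // three entries per triangle
};

// Splits a triangle list into one index list per distinct material name.
// triangleMaterials[t] names the material of triangle t, so
// indices.size() must equal 3 * triangleMaterials.size().
std::vector<SubmeshIndices> splitByMaterial(const std::vector<uint32_t>& indices,
                                            const std::vector<std::string>& triangleMaterials)
{
    std::map<std::string, std::size_t> slotOfMaterial; // material name -> position in result
    std::vector<SubmeshIndices> result;
    for (std::size_t t = 0; t < triangleMaterials.size(); ++t)
    {
        std::map<std::string, std::size_t>::iterator it = slotOfMaterial.find(triangleMaterials[t]);
        if (it == slotOfMaterial.end())
        {
            // First triangle with this material: open a new group.
            it = slotOfMaterial.emplace(triangleMaterials[t], result.size()).first;
            result.push_back(SubmeshIndices{triangleMaterials[t], {}});
        }
        SubmeshIndices& submesh = result[it->second];
        const std::vector<uint32_t>::const_iterator first =
            indices.begin() + static_cast<std::ptrdiff_t>(3 * t);
        submesh.indices.insert(submesh.indices.end(), first, first + 3);
    }
    return result;
}
```

Each returned group then corresponds to one submesh, with materialName going into m_materialName and the index list into m_vertexIndices and m_numIndices.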

A vertex buffer stores all vertex related data for that particular submesh:

// add position

vertexBufferDesc.setSemanticData( nvidia::apex::RenderVertexSemantic::POSITION, // the semantic

&mVertices[0], // the raw data pointer

sizeof(physx::PxVec3), // the raw data stride

nvidia::apex::RenderDataFormat::FLOAT3); // the raw data format

// [...] similar for normals/tangents/bitangents

// add texcoords

vertexBufferDesc.setSemanticData( nvidia::apex::RenderVertexSemantic::TEXCOORD0,

&mTexCoords[0],

sizeof(nvidia::apex::VertexUV),

nvidia::apex::RenderDataFormat::FLOAT2);

// add bones

vertexBufferDesc.setSemanticData( nvidia::apex::RenderVertexSemantic::BONE_INDEX,

&mBoneIndices[0],

sizeof(PxU16) * 4,

nvidia::apex::RenderDataFormat::USHORT4);

vertexBufferDesc.setSemanticData( nvidia::apex::RenderVertexSemantic::BONE_WEIGHT,

&mBoneWeights[0],

sizeof(physx::PxF32) * 4,

nvidia::apex::RenderDataFormat::FLOAT4);
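
The raw data pointer and stride passed with each semantic describe where element i of a buffer lives: at the base pointer plus i times the stride, in bytes. The sketch below illustrates that addressing in plain C++ for a three-float element. Float3, InterleavedVertex and readFloat3 are hypothetical names for illustration and say nothing about how APEX reads the data internally.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

struct Float3 { float x, y, z; };

// Reads element `index` from a buffer described by a base pointer and a byte stride.
Float3 readFloat3(const void* data, std::size_t strideBytes, std::size_t index)
{
    const unsigned char* bytes = static_cast<const unsigned char*>(data);
    Float3 value;
    // memcpy avoids alignment assumptions about the source buffer.
    std::memcpy(&value, bytes + index * strideBytes, sizeof(Float3));
    return value;
}

// Hypothetical interleaved layout: a position followed by a texture coordinate.
// For such a layout the stride is sizeof(InterleavedVertex), not sizeof(Float3).
struct InterleavedVertex
{
    Float3 position;
    float u, v;
};
```

For a tightly packed array, as in the calls above, the stride equals the element size. For an interleaved array the base pointer is the address of the first element's member and the stride is the size of the whole vertex.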

Custom buffers carry the painting information.

Here the buffers MAX_DISTANCE, COLLISION_SPHERE_DISTANCE, COLLISION_SPHERE_RADIUS and USED_FOR_PHYSICS are added. It is important that the strings that identify the custom buffers match these names exactly:

nvidia::apex::RenderSemanticData customBufferSemanticData[4];

// this is for max distance

customBufferSemanticData[0].data = &mMaxDistance[0];

customBufferSemanticData[0].stride = sizeof(float);

customBufferSemanticData[0].format = nvidia::apex::RenderDataFormat::FLOAT1;

customBufferSemanticData[0].ident = "MAX_DISTANCE";

// these two are for backstop

customBufferSemanticData[1].data = &mCollisionSphereDistance[0];

customBufferSemanticData[1].stride = sizeof(float);

customBufferSemanticData[1].format = nvidia::apex::RenderDataFormat::FLOAT1;

customBufferSemanticData[1].ident = "COLLISION_SPHERE_DISTANCE";

customBufferSemanticData[2].data = &mCollisionSphereRadius[0];

customBufferSemanticData[2].stride = sizeof(float);

customBufferSemanticData[2].format = nvidia::apex::RenderDataFormat::FLOAT1;

customBufferSemanticData[2].ident = "COLLISION_SPHERE_RADIUS";

// this one is also known as latch-to-nearest

customBufferSemanticData[3].data = &mUsedForPhysics[0];

customBufferSemanticData[3].stride = sizeof(unsigned char);

customBufferSemanticData[3].format = nvidia::apex::RenderDataFormat::UBYTE1;

customBufferSemanticData[3].ident = "USED_FOR_PHYSICS";

// can add multiple custom semantics to one vertex buffer

vertexBufferDesc.setCustomSemanticData(customBufferSemanticData, 4);
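
Because the identifiers must match exactly, and because taking &buffer[0] is only meaningful for a buffer with one entry per vertex, an authoring tool may want to check both before filling the descriptor. The following is a sketch of such application-side checks; the function names are hypothetical and nothing here calls into APEX.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// The four identifiers named in the text above.
static const char* const kPaintChannelNames[] = {
    "MAX_DISTANCE", "COLLISION_SPHERE_DISTANCE", "COLLISION_SPHERE_RADIUS", "USED_FOR_PHYSICS"
};

// Exact, case-sensitive comparison against the four names.
bool isPaintChannelName(const char* ident)
{
    for (const char* name : kPaintChannelNames)
    {
        if (std::strcmp(ident, name) == 0)
            return true;
    }
    return false;
}

// Checks that every painting buffer holds exactly one entry per vertex
// and that there is at least one vertex to point at.
bool paintBuffersMatchVertexCount(std::size_t numVertices,
                                  const std::vector<float>& maxDistance,
                                  const std::vector<float>& collisionSphereDistance,
                                  const std::vector<float>& collisionSphereRadius,
                                  const std::vector<unsigned char>& usedForPhysics)
{
    return numVertices > 0
        && maxDistance.size() == numVertices
        && collisionSphereDistance.size() == numVertices
        && collisionSphereRadius.size() == numVertices
        && usedForPhysics.size() == numVertices;
}
```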

Finally, create the render mesh asset authoring:

renderMeshAsset = static_cast<nvidia::apex::RenderMeshAssetAuthoring*>(mApexSDK->createAssetAuthoring(RENDER_MESH_AUTHORING_TYPE_NAME));

// and apply the descriptor

renderMeshAsset->createRenderMesh(meshDesc, true);

If the last parameter of createRenderMesh() is true, another custom buffer is created that contains all the original indices. This allows the application to track how the vertices have been reshuffled.
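
An application can use such a buffer to bring its own per-vertex data into the new vertex order. The sketch below assumes the buffer has already been read into a plain array in which entry i holds the index that vertex i of the created mesh had in the submitted data. Both that layout and the function name are assumptions made for illustration; how the buffer is queried from the asset is not shown.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// originalIndex[i] is assumed to hold the index that vertex i of the created
// render mesh had in the data handed to createRenderMesh().
template <typename T>
std::vector<T> reorderLikeRenderMesh(const std::vector<T>& perOriginalVertex,
                                     const std::vector<uint32_t>& originalIndex)
{
    std::vector<T> reordered;
    reordered.reserve(originalIndex.size());
    for (uint32_t source : originalIndex)
        reordered.push_back(perOriginalVertex.at(source)); // at() throws on a bad index
    return reordered;
}
```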

Physics Mesh

Now everything is ready for the essential part of the clothing authoring pipeline: a physics mesh has to be provided that is simulated at run time. This simulation mesh can be derived from the graphics data in several ways, or a custom mesh can be provided.

Singlelayer Cloth

With the singlelayer approach the graphics mesh is copied directly and optionally adapted to suit the purpose of cloth simulation:

mApexPhysicalMesh = mModuleClothing->createSingleLayeredMesh(

mApexRenderMeshAssetAuthoring, // the RenderMeshAssetAuthoring

mSubdivision, // the amount of subdivision

mWeldVertices, // weld vertices together

mCloseHoles, // close holes in the physical mesh

&progress // Progress listener

);

The createSingleLayeredMesh call returns a pointer to a ClothingPhysicalMesh object. The parameters define whether subdivision, vertex welding and hole closing are applied. In addition, the on/off channel of the graphics mesh (stored in the RenderMeshAssetAuthoring object) is taken into account to select a subset of the graphics mesh.

The physics mesh provides a simplification algorithm to reduce the number of mesh vertices and triangles:

mApexPhysicalMesh->simplify(subdivSize, maxSteps, -1, &progress);

A special case, called the immediate clothing approach, uses the same mesh for physics and for graphics. This requires that the mSubdivision parameter (in createSingleLayeredMesh) is set to 0 and that no simplification is applied. The advantage is that mesh-mesh skinning is not necessary.

Multilayer Clothing

The multilayer approach first creates an intermediate isosurface from the graphics mesh:

mApexIsoMesh = mModuleClothing->createMultiLayeredMesh(

mApexRenderMeshAssetAuthorings, // the RenderMeshAssetAuthoring

mSubdivision, // the amount of subdivision

mRemoveInternalMeshes, // Only keep the largest isomesh

&progress // Progress listener

);

The iso-mesh provides a simplify method. Simplifying the very dense iso-meshes is highly recommended, because it improves the quality and speed of the subsequent algorithmic steps:

mApexIsoMesh->simplify(mIsoSimplificationSubdiv, SIMPLIFICATOR_MAX_STEPS, -1, &progress);

Softbody

With the softbody approach a tetrahedral mesh is generated from the iso-mesh:

mApexPhysicalMesh = mApexIsoMesh->generateSoftbodyMesh(mInternalsSubdiv, &progress);

Cloth

The multilayer clothing approach with cloth simulation uses the intermediate iso-surface to derive a triangle mesh from the graphics mesh. The iso-surface is contracted to transform the volumetric surface to a flat triangle mesh:

PxReal volume = mApexIsoMesh->contract(

mIsoContractionSteps, // contraction iterations

0.01f, // abortion ratio

true, // edge expansion after each iteration

&progress // progress listener

);

The physics cloth mesh is then generated from the contracted iso-surface:

mApexPhysicalMesh = mApexIsoMesh->generateClothMesh(

20, // remove bubbles up to this size

&progress // progress listener

);

This step can produce a mesh that has bubbles: small pieces where the mesh has two layers, which is bad for the cloth simulation. Such bubbles are detected, and a bubble is removed if its number of faces is smaller than or equal to the number provided in the function call.

After that the physical mesh can be simplified again:

mApexPhysicalMesh->simplify(subdivSize, maxSteps, -1, &progress);

Custom Physics Mesh

The ClothingTool does not implement the creation of a custom physics mesh. However, the Clothing Module provides the means to do so, and it is possible with the Maya plug-in.

There is an interface in the ModuleClothing class to create an empty ClothingPhysicalMesh:

mApexPhysicalMesh = mModuleClothing->createEmptyPhysicalMesh();

Then the cloth or softbody mesh can be created by providing a vertex and an index buffer to that physical mesh:

mApexPhysicalMesh->setGeometry(

isTetraMesh,

numVertices,

vertexByteStride,

vertices,

numIndices,

indexByteStride,

indices

);
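
To make the buffer arguments concrete, the sketch below builds the vertex and index arrays of a flat rectangular cloth patch in plain C++. Vec3, GridMesh and makeGrid are hypothetical names, not APEX types, and the winding order chosen here is arbitrary.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

struct Vec3 { float x, y, z; };

// Hypothetical holder for the two buffers a custom cloth mesh needs.
struct GridMesh
{
    std::vector<Vec3> vertices;
    std::vector<uint32_t> indices; // three per triangle
};

// Builds a flat grid of (cellsX + 1) * (cellsY + 1) vertices in the XY plane,
// with two triangles per cell.
GridMesh makeGrid(uint32_t cellsX, uint32_t cellsY, float cellSize)
{
    GridMesh mesh;
    const uint32_t rowLength = cellsX + 1;
    for (uint32_t y = 0; y <= cellsY; ++y)
        for (uint32_t x = 0; x <= cellsX; ++x)
            mesh.vertices.push_back(Vec3{x * cellSize, y * cellSize, 0.0f});

    for (uint32_t y = 0; y < cellsY; ++y)
    {
        for (uint32_t x = 0; x < cellsX; ++x)
        {
            const uint32_t v0 = y * rowLength + x; // corner with the smallest x and y
            const uint32_t v1 = v0 + 1;
            const uint32_t v2 = v0 + rowLength;
            const uint32_t v3 = v2 + 1;
            const uint32_t cell[6] = { v0, v1, v2, v2, v1, v3 };
            mesh.indices.insert(mesh.indices.end(), cell, cell + 6);
        }
    }
    return mesh;
}
```

Going by the parameter names in the call above, such a mesh would presumably be passed with isTetraMesh set to false, numVertices set to vertices.size(), vertexByteStride set to sizeof(Vec3), numIndices set to indices.size() and indexByteStride set to sizeof(uint32_t).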

Creating the Clothing Asset Authoring Object

The ClothingAsset is the container that combines the graphical and physical meshes with additional information. The authoring version of this class, ClothingAssetAuthoring, is needed to actually carry out the combination:

// Any unique name is ok

mApexClothingAssetAuthoring = mModuleClothing->createAssetAuthoring(CLOTHING_AUTHORING_TYPE_NAME, "The authoring asset");

Binding the Graphical and the Physical Mesh

Having created a graphical mesh and physical mesh, they can now be given to the clothing asset authoring object:

mApexClothingAssetAuthoring->setMeshes(

lod, // the graphical lod number of the graphical/physical mesh pair

mApexRenderMeshAssetAuthoring, // the RenderMeshAssetAuthoring

mApexPhysicalMesh, // the ClothingPhysicalMesh

numPhysicalLods, // size of the array of max distances

physicalLodValues, // an array of max distances to chop up the physical mesh

&progress // progress listener

);

At that point the mapping between the physics and the graphics mesh is created. Also the painting channels are interpolated from the graphical mesh onto the physical mesh.

The meshes are provided together with an LoD value. At run time the game engine can ask the asset to be rendered and simulated with the specified LoD.

In addition, there is a physical LoD. Optionally, a list of values can be provided that specifies how much the Max Distances are reduced for the different physical LoDs. Based on the Max Distance painting of the asset, this determines which triangles are simulated.
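
As an illustration of how a reduction value can select the simulated triangles, the sketch below applies one plausible rule: the reduction is subtracted from each painted Max Distance and clamped at zero, and a triangle counts as simulated while at least one of its vertices keeps a positive value. This rule is an assumption made for the example, not a statement of what the Clothing Module does internally, and both function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Assumed rule: a physical LoD subtracts `reduction` from the painted value,
// clamping at zero. A vertex with a result of zero is treated as not simulated.
float reducedMaxDistance(float paintedMaxDistance, float reduction)
{
    return std::max(0.0f, paintedMaxDistance - reduction);
}

// Counts triangles that keep at least one simulated vertex after the reduction.
std::size_t countSimulatedTriangles(const std::vector<uint32_t>& indices,
                                    const std::vector<float>& paintedMaxDistance,
                                    float reduction)
{
    std::size_t count = 0;
    for (std::size_t i = 0; i + 2 < indices.size(); i += 3)
    {
        bool simulated = false;
        for (std::size_t corner = 0; corner < 3; ++corner)
        {
            const float painted = paintedMaxDistance[indices[i + corner]];
            simulated = simulated || reducedMaxDistance(painted, reduction) > 0.0f;
        }
        if (simulated)
            ++count;
    }
    return count;
}
```

Under this rule, larger reduction values shrink the simulated region toward the vertices with the largest painted Max Distance.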

Adding Information about Bones

The asset also needs some information about the bones that are used to modify this particular asset. For each bone this includes a name, the bind pose and the index of its parent bone.

mApexClothingAssetAuthoring->setBoneInfo(boneIndex, boneName, bonePose, boneParent);

Additionally, a collision representation can be added to each bone as well.

Note

All of this information must already be given in bone pose space!

// clear all bone collision

mApexClothingAssetAuthoring->clearAllBoneActors();

// a bone can only have either convex collision or capsule collision

mApexClothingAssetAuthoring->addBoneConvex(boneIndex, vertices, numVertices);

// a capsule can have a transform relative to the bone pose

mApexClothingAssetAuthoring->addBoneCapsule(boneIndex, capsuleRadius, capsuleHeight, localPose);
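
If the collision geometry was modeled in world space, it has to be brought into the space of the bone's bind pose before being passed in. The following sketch shows that conversion for a rigid bind pose (rotation plus translation, no scale). RigidPose and worldToBoneSpace are hypothetical helpers written for this example; an application using PhysX math types would use their inverse transform instead.

```cpp
#include <cassert>

struct Vec3 { float x, y, z; };

// Hypothetical rigid bind pose: world = R * local + t.
struct RigidPose
{
    Vec3 r0, r1, r2; // rows of the rotation matrix R
    Vec3 t;          // bone origin in world space
};

// Inverse of a rigid transform: local = R^T * (world - t).
Vec3 worldToBoneSpace(const RigidPose& bindPose, const Vec3& world)
{
    const Vec3 d = { world.x - bindPose.t.x, world.y - bindPose.t.y, world.z - bindPose.t.z };
    // Multiplying by R^T means taking dot products with the columns of R.
    return Vec3{
        bindPose.r0.x * d.x + bindPose.r1.x * d.y + bindPose.r2.x * d.z,
        bindPose.r0.y * d.x + bindPose.r1.y * d.y + bindPose.r2.y * d.z,
        bindPose.r0.z * d.x + bindPose.r1.z * d.y + bindPose.r2.z * d.z };
}
```

Applying this to every vertex of a convex, or to the pose of a capsule, yields data relative to the bone rather than to the world.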

Adding Misc Parameters

Some of the parameters for Cloth and Softbody that are not already in the ClothingMaterial (such as thickness, self collision thickness, self collision flag and untangling flag) are given to the asset. They are set through a collection of methods named ClothingAssetAuthoring::setSimulation*(). These values are constant for the asset and all its actors.

Scaling at Export

To export a parameterized object with scale, a copy of the ClothingAssetAuthoring has to be made and then scaled before serialization:

void serializeWithScale(float scale)

{

NvParameterized::Interface* copyFrom = mApexClothingAssetAuthoring->getNvParameterized();

// check the source before it is dereferenced

PX_ASSERT(copyFrom != NULL);

NvParameterized::Interface* copyTo = mApexSDK->getParameterizedTraits()->createNvParameterized(copyFrom->className());

PX_ASSERT(copyTo != NULL);

copyTo->copy(copyFrom);

nvidia::apex::ClothingAssetAuthoring* tempObject = static_cast<nvidia::apex::ClothingAssetAuthoring*>(mApexSDK->createAssetAuthoring(copyTo, "tempObject"));

physx::PxMat44 id = physx::PxMat44::getIdentity();

tempObject->applyTransformation(id, scale, true, true);

serialize(tempObject->getNvParameterized());

tempObject->release();

}

Saving & Loading the Asset

The clothing asset authoring object is now completely set up and ready to be serialized. There are two ways of serializing an asset. The first is the parameterized way, described next.

The parameterized way

Every asset implements the getNvParameterized() method, which returns an object with full reflection. It is up to the game engine how to serialize this object to memory or disk. Sample implementations are provided using the NvParameterized::Serializer class:

bool saveParameterized(const char* filename, ClothingAsset* asset)

{

NvParameterized::Interface* paramObject = asset->getNvParameterized();

PX_ASSERT(paramObject != NULL);

NvParameterized::Serializer::SerializeType serializerType = NvParameterized::Serializer::NST_LAST;

const char* ext = getExtension(filename);

if (!strcmp(ext, "apx"))

serializerType = NvParameterized::Serializer::NST_XML;

else if (!strcmp(ext, "apb"))

serializerType = NvParameterized::Serializer::NST_BINARY;

PX_ASSERT(serializerType != NvParameterized::Serializer::NST_LAST);

// set to true only if the asset was actually written

bool saved = false;

physx::PxFileBuf* fileBuffer = mApexSDK->createStream(filename, physx::PxFileBuf::OPEN_WRITE_ONLY);

if (fileBuffer != NULL)

{

if (fileBuffer->isOpen())

{

NvParameterized::Serializer* serializer = mApexSDK->createSerializer(serializerType);

NvParameterized::Serializer::ErrorType serError = NvParameterized::Serializer::ERROR_NONE;

serError = serializer->serialize(*fileBuffer, paramObject, 1);

PX_ASSERT(serError == NvParameterized::Serializer::ERROR_NONE);

saved = (serError == NvParameterized::Serializer::ERROR_NONE);

// release the serializer, as the loading code below does

serializer->release();

}

fileBuffer->release();

fileBuffer = NULL;

}

return saved;

}
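
The getExtension() helper used above is not shown in this chapter. A possible implementation is sketched below; its behavior, in particular returning an empty string rather than NULL when there is no extension, is an assumption chosen so that the strcmp calls above stay safe.

```cpp
#include <cassert>
#include <cstring>

// Returns the text after the last '.' in the file name part of the path,
// or an empty string when the file name has no extension.
const char* getExtension(const char* filename)
{
    const char* dot = std::strrchr(filename, '.');
    if (dot == NULL)
        return "";
    // A dot inside a directory name ("my.dir/file") does not start an extension.
    if (std::strchr(dot, '/') != NULL || std::strchr(dot, '\\') != NULL)
        return "";
    return dot + 1;
}
```

The comparison in saveParameterized() is case-sensitive, so with this helper a file named "jacket.APX" would not be recognized as XML.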

Conversely, the game engine has to load the parameterized object before creating a clothing asset. The code is the same as for creating run-time assets; the only difference is that an authoring asset is created instead:

nvidia::apex::AssetAuthoring* loadParameterized(const char* fullpath)

{

nvidia::apex::AssetAuthoring* result = NULL;

NvParameterized::Serializer::DeserializedData deserializedData;

// A FileBuffer needs to be created.

// This can also be a customized subclass of physx::PxFileBuf

physx::PxFileBuf* fileBuf = mApexSDK->createStream(fullpath, physx::PxFileBuf::OPEN_READ_ONLY);

if (fileBuf != NULL)

{

if (fileBuf->isOpen())

{

char peekData[32];

fileBuf->peek(peekData, 32);

// This returns either Serializer::NST_BINARY or Serializer::NST_XML

NvParameterized::Serializer::SerializeType serType = mApexSDK->getSerializeType(peekData, 32);

NvParameterized::Serializer* serializer = mApexSDK->createSerializer(serType);

NvParameterized::Serializer::ErrorType serError = NvParameterized::Serializer::ERROR_NONE;

serError = serializer->deserialize(*fileBuf, deserializedData);

// do some error checking here

if (serError != NvParameterized::Serializer::ERROR_NONE)

processError(serError);

serializer->release();

}

fileBuf->release();

}

// Now deserializedData contains one or more NvParameterized objects.

for (unsigned int i = 0; i < deserializedData.size(); i++)

{

NvParameterized::Interface* data = deserializedData[i];

printf("Creating Asset of type %s\n", data->className());

if (result == NULL)

{

result = mApexSDK->createAssetAuthoring(data, "some unique name");

}

else

{

// we have to handle files with multiple assets differently, for now, let's get rid of it

deserializedData[i]->destroy();

}

}

return result;

}

Progress Listener

Many functions in the authoring pipeline take considerable processing time. To allow a progress bar to be shown during the calculation, they accept a progress listener: the nvidia::apex::IProgressListener interface can be implemented to get callbacks that report the current processing status.

Passing NULL for the progress listener is perfectly valid. To receive callbacks, pass a pointer to an implementation instead:

MyProgressListener progress;

mApexClothingAssetAuthoring->setMeshes( /* all the params */ , &progress);
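
A listener implementation could look like the sketch below. To keep the example compilable without the SDK, it derives from a stand-in interface instead of nvidia::apex::IProgressListener. The method name setProgress and its signature are assumptions that should be checked against the SDK header, and runLongTask is a hypothetical placeholder for an authoring call.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Stand-in for nvidia::apex::IProgressListener so the sketch compiles on its own.
// The method name and signature are assumptions; check the SDK header.
class ProgressListenerStandIn
{
public:
    virtual ~ProgressListenerStandIn() {}
    virtual void setProgress(int progress, const char* taskName = NULL) = 0;
};

class MyProgressListener : public ProgressListenerStandIn
{
public:
    MyProgressListener() : mLastProgress(-1) {}

    virtual void setProgress(int progress, const char* taskName = NULL)
    {
        if (progress == mLastProgress)
            return; // skip repeated values to avoid redundant UI updates
        mLastProgress = progress;
        if (taskName != NULL)
            mLastTask = taskName;
        std::printf("[%3d%%] %s\n", progress, mLastTask.c_str());
    }

    int lastProgress() const { return mLastProgress; }
    const std::string& lastTask() const { return mLastTask; }

private:
    int mLastProgress;
    std::string mLastTask;
};

// Hypothetical long-running step showing how a callee can treat a NULL listener.
void runLongTask(ProgressListenerStandIn* listener)
{
    for (int percent = 0; percent <= 100; percent += 25)
    {
        if (listener != NULL)
            listener->setProgress(percent, "Simplify");
    }
}
```

A real tool would forward the reported value to its progress bar instead of printing it.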