This whitepaper describes how developers of speech-enabled applications can use Microsoft's Confusability.exe tool to detect phrases in grammars that may be confused with each other when processed by a Microsoft speech recognition engine.

Confusable phrases in grammars may cause misrecognition of speech input to an application and degrade the experience of its users. You will find discussion of issues in grammar development that the Confusability tool can and cannot help you to solve, in addition to guidance about how to leverage the results of its analysis to optimize the speech recognition experience for end-users.

Intended for the developer dealing with speech and grammar development, this whitepaper answers the following questions about the Confusability.exe tool:

"What does the tool do?"

"At what stage of grammar development should I use it?"

"How do I use the tool?"

"How do I apply the results?"

Part I: "What does the tool do?"

Confusability.exe analyzes a set of one or more grammars for phrases that could be misrecognized as other phrases in the same grammar(s). That is, the tool detects the risk that one phrase may be falsely accepted as another phrase.

What do we mean by "confusable" phrases? Here's an example: Suppose that you create an application with a state that listens for an end-user to say either "fight" or "bite." No problem, right? When an end-user says "fight", she draws a sword, and when she says "bite", she shows her teeth. But what if, sometimes, when the user says "fight", the recognizer thinks it heard "bite"? In this case, we'd call these two phrases "confusable". In speech science, confusable phrases are known as "in-grammar false accepts", but here we'll simply call them confusable phrases.

As a developer, you want to know before you ship your application whether you've created a grammar, or a set of simultaneously active grammars, containing phrases that risk being confused with other phrases in the grammar(s). That's where Confusability.exe can help. If it detects confusable phrases, you may choose to alter your design or to invest more time in testing how those phrases perform in your application.

The tool may detect the following types of confusable phrases:

Homonyms (for example "fly it" and "flight"; "bare" and "bear")

Near homonyms or rhyming phrases (for example "bag it" and "tag it")

Unintuitive confusable phrases that the recognizer identifies as having overlapping acoustic similarities (for example "stop" and "up")

Although the tool detects the potential for confusability in your grammars, keep the following caveats in mind when you interpret its results:

The tool is not a substitute for collecting end-user speech in a test production environment. The best insight into how speech and grammars perform will always be gained through their interaction with speech from end-users of your application.

The tool's results are not always intuitive and may not flag phrases that we, as native speakers, think are confusable. That is, just because two phrases rhyme to you and me, it does not mean they will be flagged by the tool.

Similarly, just because the tool doesn't flag terms as confusable does not mean that some end-users won't experience two phrases as being confusable under some conditions. Again, there are many factors in a production environment such as noise and speaker variation that can ultimately affect confusability.

The results may differ based on which speech recognition engine you use, or on its version. For example, you might find confusable results with one recognizer but not another. Also, if you use the same recognizer that you used for a previous analysis, but there have been changes or updates to the recognizer and its components (such as its acoustic model), then this may produce a different result when run against the same grammar.

However, despite these caveats, Confusability.exe can be a helpful tool in the grammar development workflow. It lets you improve your grammar incrementally, as one step toward making it perform as expected.

Part II: "When should I use the tool?"

Use the Confusability.exe tool early in the development process, after you have a functional grammar, but before you've gone to the trouble of setting up a test production environment. The tool provides a sanity-check-level validation of the design of a grammar or an application state, answering questions such as:

Did I inadvertently create a state in the application that has multiple instructions to listen for the same phrase? For example, you may have accidentally put the same phrase in two different grammars or rules, which could result in unexpected application behavior if their semantics differ.

Are there unintuitive phrases that could lead to users saying one thing, and the recognizer creating a match to a different phrase in the grammar?
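The first question can often be answered before any acoustic analysis, because an exact duplicate is visible in the grammar text itself. As a complement to the tool (not a replacement for it), a minimal Python sketch like the following can list literal phrases that occur in more than one rule or grammar file. It assumes W3C SRGS XML grammars like the DogControl.grxml example in Part III, compares phrases case-insensitively, and uses hypothetical helper names (literal_phrases, find_duplicates) and hypothetical grammars.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict

SRGS = "{http://www.w3.org/2001/06/grammar}"

def literal_phrases(grammar_xml):
    """Yield (rule_id, phrase) for each <item> that holds literal text.

    Simplified: items that only wrap a nested <one-of> produce no text
    (their child items are visited instead); <ruleref>, <repeat>, and
    other SRGS constructs are ignored.
    """
    root = ET.fromstring(grammar_xml)
    for rule in root.iter(f"{SRGS}rule"):
        for item in rule.iter(f"{SRGS}item"):
            text = " ".join((item.text or "").split()).lower()
            if text:
                yield rule.get("id"), text

def find_duplicates(grammars):
    """Map each phrase found more than once to its (file, rule id) locations."""
    seen = defaultdict(list)
    for name, xml_text in grammars.items():
        for rule_id, phrase in literal_phrases(xml_text):
            seen[phrase].append((name, rule_id))
    return {phrase: locs for phrase, locs in seen.items() if len(locs) > 1}

# Two hypothetical grammars that are active in the same application state.
DOG = """<grammar xmlns="http://www.w3.org/2001/06/grammar" version="1.0"
  xml:lang="en-US" root="DOG_ACTIONS"><rule id="DOG_ACTIONS"><one-of>
  <item>Bite</item><item>Fight</item></one-of></rule></grammar>"""
COMBAT = """<grammar xmlns="http://www.w3.org/2001/06/grammar" version="1.0"
  xml:lang="en-US" root="COMBAT"><rule id="COMBAT"><one-of>
  <item>Block</item><item>bite</item></one-of></rule></grammar>"""

dupes = find_duplicates({"DogControl.grxml": DOG, "Combat.grxml": COMBAT})
for phrase, locations in dupes.items():
    print(f"'{phrase}' appears in {locations}")
```

A duplicate is not necessarily a bug, since two rules may legitimately share a phrase with the same semantics, but each hit is worth checking against the semantics that each rule returns.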

In addition, you may want to run the tool on your grammars again after a major update or change to the recognition engine and its environment.

Part III: "Ok, now how do I use it?"

To get started, let's assume that you have your developer environment set up with the following components:

1. You've installed the Microsoft Speech Platform SDK 11.

2. You've configured the grammar development tools to target the recognizer that your application will use.

3. You've authored a functional grammar that you want to analyze for confusability.

From the command line, you run the tool as follows:

```
> Confusability.exe -In <GrammarFileName> -Out <OutputFilename> -RecoConfig <RecognizerConfigurationFilename>
```

Note that there are additional options, but for a basic scenario with a single simple grammar, these three parameters are all we need. Also, if you've set up the tools to work with a recognizer via the "RecoConfig.xml" file (step 2), you don't need to specify the -RecoConfig parameter on the command line, because the tool looks for "RecoConfig.xml" when the parameter is omitted. See Setting Up the Grammar Development Tools.
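If you analyze grammars from a build script, it can help to assemble the command line in one place. The following Python sketch does that with only the three parameters described above, adding -RecoConfig only when a configuration file is given. It assumes Confusability.exe is on the PATH of a Windows machine with the SDK installed. build_command and run_confusability are hypothetical helper names, and the sketch makes no assumption about what the tool's exit codes mean.

```python
import subprocess
from typing import List, Optional

def build_command(grammar: str, output: str,
                  reco_config: Optional[str] = None,
                  exe: str = "Confusability.exe") -> List[str]:
    """Return the argument list for one Confusability.exe run.

    -RecoConfig is omitted when reco_config is None, in which case the
    tool looks for RecoConfig.xml itself.
    """
    cmd = [exe, "-In", grammar, "-Out", output]
    if reco_config is not None:
        cmd += ["-RecoConfig", reco_config]
    return cmd

def run_confusability(grammar: str, output: str,
                      reco_config: Optional[str] = None) -> subprocess.CompletedProcess:
    """Run the tool and capture its console output as text for later inspection."""
    return subprocess.run(build_command(grammar, output, reco_config),
                          capture_output=True, text=True, check=False)

cmd = build_command("DogControl.grxml", "DogControl.grxml.confusability.xml",
                    "RecoConfig.xml")
print(" ".join(cmd))
```

Passing the arguments as a list rather than a single string avoids quoting problems with paths that contain spaces.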

Note: The tool can take several minutes to process a grammar that contains up to 100 phrases, depending on your machine's hardware. Larger grammars take longer.

An intuitive example: Dog Actions

Imagine that we have an application in which the end-user is controlling a dog avatar using her voice. After consideration of the application's design, we've arrived at a single grammar that maps five different phrases to five different actions. Our grammar is as follows:

```xml
<?xml version="1.0" encoding="utf-8"?>
<!-- Input to Confusability.exe: "DogControl.grxml" -->
<grammar mode="voice"
         root="DOG_ACTIONS"
         tag-format="semantics/1.0-literals"
         version="1.0" xml:lang="en-US"
         xmlns="http://www.w3.org/2001/06/grammar">
  <rule id="DOG_ACTIONS" scope="public">
    <one-of>
      <item>
        Walk
        <tag> walk </tag>
      </item>
      <item>
        Run
        <tag> run </tag>
      </item>
      <item>
        Lay down
        <tag> lay down </tag>
      </item>
      <item>
        <one-of>
          <item> Bite </item>
          <item> Snap </item>
        </one-of>
        <tag> bite </tag>
      </item>
      <item>
        Fight
        <tag> fight </tag>
      </item>
    </one-of>
  </rule>
</grammar>
```
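The grammar defines five actions, but the Bite action nests a one-of with two alternatives ("Bite" and "Snap"), so it yields six spoken phrases. This presumably accounts for the 6 phrases that the tool reports in its console output. The following Python sketch makes that expansion explicit. It is a simplified, hypothetical helper (expand_one_of) that understands only one-of, item, and tag elements. Real SRGS grammars may also use ruleref, repeat, and other constructs that it ignores.

```python
import xml.etree.ElementTree as ET

SRGS = "{http://www.w3.org/2001/06/grammar}"

# Compact copy of DogControl.grxml (whitespace differs; content is the same).
DOG_CONTROL = """<grammar mode="voice" root="DOG_ACTIONS"
    tag-format="semantics/1.0-literals" version="1.0" xml:lang="en-US"
    xmlns="http://www.w3.org/2001/06/grammar">
  <rule id="DOG_ACTIONS" scope="public">
    <one-of>
      <item>Walk <tag> walk </tag></item>
      <item>Run <tag> run </tag></item>
      <item>Lay down <tag> lay down </tag></item>
      <item>
        <one-of><item> Bite </item><item> Snap </item></one-of>
        <tag> bite </tag>
      </item>
      <item>Fight <tag> fight </tag></item>
    </one-of>
  </rule>
</grammar>"""

def expand_one_of(one_of, inherited_tag=None):
    """Return (phrase, semantics) for every alternative under a <one-of>.

    An item's own <tag> wins; otherwise the tag of the enclosing item applies.
    """
    phrases = []
    for item in one_of.findall(f"{SRGS}item"):
        tag = item.find(f"{SRGS}tag")
        semantics = tag.text.strip() if tag is not None else inherited_tag
        nested = item.find(f"{SRGS}one-of")
        if nested is not None:
            phrases.extend(expand_one_of(nested, semantics))
        else:
            phrases.append((" ".join((item.text or "").split()), semantics))
    return phrases

root = ET.fromstring(DOG_CONTROL)
rule = root.find(f"{SRGS}rule[@id='DOG_ACTIONS']")
phrases = expand_one_of(rule.find(f"{SRGS}one-of"))
for text, semantics in phrases:
    print(f"{text:<10} -> {semantics}")
print(len(phrases), "phrases")
```

Listing phrases together with their semantics also makes it easy to see that "Bite" and "Snap" share a meaning, so confusion between those two would be harmless.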

To analyze the DogControl.grxml grammar for confusable phrases, you enter the following on the command line:

```
> Confusability.exe -In DogControl.grxml -Out DogControl.grxml.confusability.xml -RecoConfig RecoConfig.xml
```

The tool will output the following to the console:

```
Info: Successfully output 6 phrases
Info: 6 phrases analyzed.
Warning: 2 phrases found with confusability greater than zero.
Info: Successfully output details to "DogControl.grxml.confusability.out.xml"
Processing Complete: 0 Error(s). 1 Warning(s)
```
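If you run the tool from a build script, the summary line at the end of the console output is a convenient place to detect warnings. The following Python sketch parses that line with a regular expression. It assumes the exact wording shown in this example output, which may differ in other versions of the tool, and parse_summary is a hypothetical helper name.

```python
import re

# Matches the final summary line from the example console output, e.g.
# "Processing Complete: 0 Error(s). 1 Warning(s)"
SUMMARY = re.compile(r"Processing Complete:\s*(\d+) Error\(s\)\.\s*(\d+) Warning\(s\)")

def parse_summary(console_text):
    """Return (errors, warnings) from the tool's console output."""
    match = SUMMARY.search(console_text)
    if match is None:
        raise ValueError("no 'Processing Complete' summary line found")
    return int(match.group(1)), int(match.group(2))

CONSOLE = """Info: Successfully output 6 phrases
Info: 6 phrases analyzed.
Warning: 2 phrases found with confusability greater than zero.
Info: Successfully output details to "DogControl.grxml.confusability.out.xml"
Processing Complete: 0 Error(s). 1 Warning(s)"""

errors, warnings = parse_summary(CONSOLE)
if warnings:
    print(f"{warnings} warning(s): inspect the output file for confusable pairs")
```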

The Warning is the key here, indicating that there are two phrases with detected risk for confusability. Opening the output file (DogControl.grxml.confusability.out.xml) reveals the following:

```xml
<?xml version="1.0" encoding="utf-8"?>
<Scenario xml:space="preserve">
  <Utterances>
    <Utterance id="1">
      <TranscriptText>Fight</TranscriptText>
      <TranscriptSemantics>fight</TranscriptSemantics>
      <TranscriptPronunciation>F AI T</TranscriptPronunciation>
      <RecoResultText>Bite</RecoResultText>
      <RecoResultSemantics>bite</RecoResultSemantics>
      <RecoResultRuleTree>
        <Rule id="DOG_ACTIONS" uri="file:///C:/Tools/DogControl.grxml">Bite</Rule>
      </RecoResultRuleTree>
      <RecoResultPronunciation>B AI T</RecoResultPronunciation>
      <RecoResultConfidence>0.1304087</RecoResultConfidence>
    </Utterance>
    <Utterance id="2">
      <TranscriptText>Bite</TranscriptText>
      <TranscriptSemantics>bite</TranscriptSemantics>
      <TranscriptPronunciation>B AI T</TranscriptPronunciation>
      <RecoResultText>Fight</RecoResultText>
      <RecoResultSemantics>fight</RecoResultSemantics>
      <RecoResultRuleTree>
        <Rule id="DOG_ACTIONS" uri="file:///C:/Tools/DogControl.grxml">Fight</Rule>
      </RecoResultRuleTree>
      <RecoResultPronunciation>F AI T</RecoResultPronunciation>
      <RecoResultConfidence>0.5065575</RecoResultConfidence>
    </Utterance>
  </Utterances>
</Scenario>
```

Comparing the TranscriptText element (the expected recognition result) with the RecoResultText element (the recognition result the tool obtained) in each Utterance element shows that "Fight" and "Bite" are at risk of being confused. This does not mean that users who say "Fight" will always be recognized as having said "Bite", but it does mean that these phrases may be misrecognized as each other.

The tool provides a metric that quantifies the degree of this risk via the RecoResultConfidence element. If you have a set of results with many possible confusions, you should pay more attention to phrases with values closer to "1" in the RecoResultConfidence element, and consider changing those phrases. At a higher level, the takeaway is that both phrases have a predicted risk of being confusable for some users some of the time.

Note: The value for RecoResultConfidence in the output of the Confusability tool is not related to the confidence score that the speech recognition engine assigns to recognition results. The Confusability tool does not take into account the confidencelevel setting of the speech recognition engine or the confidence score that it assigns to individual recognition results. It creates an independent metric that estimates the risk of confusability.
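When the output contains many Utterance elements, sorting them by RecoResultConfidence makes it easier to focus on the highest-risk phrases first. The following Python sketch reads the output format shown above, sorts the directed confusions by that metric, and also collapses them into unordered pairs, in line with the guidance to treat a confusion found in one direction as a risk in both. The element names come from the sample output. The Confusion class and the function names are hypothetical, and the metric is used only to rank results, not as a probability.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass

@dataclass
class Confusion:
    spoken: str    # TranscriptText: the phrase assumed to be spoken
    heard: str     # RecoResultText: the phrase it may be recognized as
    metric: float  # RecoResultConfidence: a risk metric, not a probability

def read_confusions(root):
    """Collect every <Utterance> and sort by descending risk metric."""
    found = [Confusion(u.findtext("TranscriptText"),
                       u.findtext("RecoResultText"),
                       float(u.findtext("RecoResultConfidence")))
             for u in root.iter("Utterance")]
    return sorted(found, key=lambda c: c.metric, reverse=True)

def risky_pairs(confusions):
    """Merge both directions into one unordered pair, keeping the larger metric."""
    pairs = {}
    for c in confusions:
        key = tuple(sorted((c.spoken, c.heard)))
        pairs[key] = max(pairs.get(key, 0.0), c.metric)
    return sorted(pairs.items(), key=lambda kv: kv[1], reverse=True)

# Trimmed copy of DogControl.grxml.confusability.out.xml (other elements omitted).
SAMPLE = """<Scenario xml:space="preserve"><Utterances>
  <Utterance id="1"><TranscriptText>Fight</TranscriptText>
    <RecoResultText>Bite</RecoResultText>
    <RecoResultConfidence>0.1304087</RecoResultConfidence></Utterance>
  <Utterance id="2"><TranscriptText>Bite</TranscriptText>
    <RecoResultText>Fight</RecoResultText>
    <RecoResultConfidence>0.5065575</RecoResultConfidence></Utterance>
</Utterances></Scenario>"""

# For a real file: root = ET.parse("DogControl.grxml.confusability.out.xml").getroot()
confusions = read_confusions(ET.fromstring(SAMPLE))
for c in confusions:
    print(f"{c.spoken!r} may be heard as {c.heard!r} (metric {c.metric})")
print(risky_pairs(confusions))
```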

In-depth detail: Why "Bite" is more confusable with "Fight" than the other way around

You may also be asking why the results predict "Bite" to be more confusable with "Fight" than the other way around. While "Fight" and "Bite" may be similar sounding to human ears, a speech recognizer has different sensitivity to speech sounds.

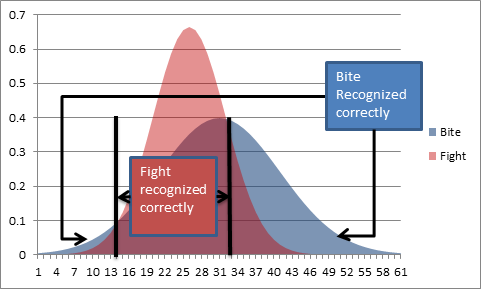

To explain the difference, the following diagram provides a hypothetical schema representing each phrase's acoustic representation in a normalized distribution. Note that the Confusability tool does not generate diagrams.

Figure 1: The Inequality of Risk for Confusable Phrases

The graph above illustrates why the tool can predict that two phrases are confusable without predicting an equal level of risk in each direction. For the "Bite" and "Fight" phrases, the features representing the two phrases overlap.

Because "Fight" has a smaller variance in its distribution, it has a greater chance of being recognized for itself when spoken. "Bite", on the other hand, tends to have an acoustic representation with a lot of variance. This means that when "Bite" is spoken it has a higher chance of being mistaken for "Fight".
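To make the figure's argument concrete, the following Python sketch models each phrase as a hypothetical one-dimensional normal distribution over a single acoustic feature. It classifies a sample as whichever phrase has the higher likelihood, assuming equal priors. Nothing here describes how Confusability.exe computes its metric. The means, standard deviations, and function names are illustrative assumptions. With a narrow "Fight" distribution and a wide, overlapping "Bite" distribution, the wide one is misclassified more often.

```python
import math
from statistics import NormalDist

def narrow_class_interval(narrow, wide):
    """Interval of x where the narrower distribution has the higher density.

    With narrow.stdev < wide.stdev, log p_narrow(x) - log p_wide(x) is a
    downward-opening quadratic a*x**2 + b*x + c, positive between its roots.
    """
    m1, s1, m2, s2 = narrow.mean, narrow.stdev, wide.mean, wide.stdev
    if not s1 < s2:
        raise ValueError("expected narrow.stdev < wide.stdev")
    a = 1 / (2 * s2**2) - 1 / (2 * s1**2)
    b = m1 / s1**2 - m2 / s2**2
    c = m2**2 / (2 * s2**2) - m1**2 / (2 * s1**2) + math.log(s2 / s1)
    root = math.sqrt(b * b - 4 * a * c)  # always real when s1 < s2
    lo, hi = sorted(((-b - root) / (2 * a), (-b + root) / (2 * a)))
    return lo, hi

def confusion_rates(narrow, wide):
    """Return (P(narrow heard as wide), P(wide heard as narrow))."""
    lo, hi = narrow_class_interval(narrow, wide)
    narrow_heard_as_wide = 1 - (narrow.cdf(hi) - narrow.cdf(lo))
    wide_heard_as_narrow = wide.cdf(hi) - wide.cdf(lo)
    return narrow_heard_as_wide, wide_heard_as_narrow

fight = NormalDist(mu=0.0, sigma=1.0)  # hypothetical: tight distribution
bite = NormalDist(mu=2.0, sigma=2.0)   # hypothetical: wide distribution
fight_as_bite, bite_as_fight = confusion_rates(fight, bite)
print(f"P(Fight heard as Bite) = {fight_as_bite:.3f}")
print(f"P(Bite heard as Fight) = {bite_as_fight:.3f}")
```

Swapping the two standard deviations (and the argument order) reverses the asymmetry. This is the point of Figure 1: overlap alone makes two phrases confusable, while the spread of each distribution decides which direction carries more risk.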

Part IV: "How do I apply the results of Confusability analysis?"

Based on the tool results, there are several actions that you can take:

Consider altering the grammar design to mitigate the risk of confusion. For one phrase in each confusable pair, choose a different phrase that means the same thing. For example, in the DogControl.grxml grammar, consider changing "Fight" to "Attack". Doing so would result in zero predicted confusable phrases when you re-run the tool on the grammar. This approach is recommended if you do not have the time and resources for adequate utterance collection and tuning, or if modifying the phrases doesn't degrade the end-user experience (saying "Attack" is just as good as saying "Fight").

If you prefer not to change your design, consider paying extra attention to end-user testing. Do users experience a high number of false accepts (confusions) when saying those phrases in a test production environment? If not, then the production environment doesn't present a high risk of confusion for those phrases, and you can be more confident that game-play will result in a similar experience. However, if users in a test production environment encounter an unacceptable number of false accepts, you can:

Reconsider a design change to the grammar now that you have data from end-users that supports the results given by the tool.

Consider a fall-back in the game-play where a false accept can be mitigated through additional logic. For example, you may want to prompt the user to confirm her input when she speaks a confusable phrase, or give the user an opportunity to cancel a request before it's executed.

And of course, you always have the option of doing nothing. You may be comfortable with this option if you are familiar with how your platform works with speech in production and how it tends to behave for your user segment.
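The confirmation fallback described above can be sketched in a few lines of application logic. In the following Python sketch, the flagged pair, the function names, and the confirm and execute callbacks are all hypothetical. It also makes one design choice of its own: it asks for confirmation only when both members of a flagged pair are active in the current state, because a phrase cannot be confused with a phrase the recognizer is not listening for.

```python
# Hypothetical: semantic values flagged by the analysis, stored as unordered
# pairs because a confusion found in one direction is treated as two-way.
CONFUSABLE_PAIRS = {frozenset({"bite", "fight"})}

def needs_confirmation(semantics, active):
    """True if `semantics` belongs to a flagged pair whose partner is also active."""
    return any(semantics in pair and (pair - {semantics}) <= active
               for pair in CONFUSABLE_PAIRS)

def handle_result(semantics, active, execute, confirm):
    """Execute the action, asking `confirm` first for confusable results."""
    if needs_confirmation(semantics, active) and not confirm(semantics):
        return False  # the user declined, so nothing is executed
    execute(semantics)
    return True

active_now = {"walk", "run", "lay down", "bite", "fight"}
performed = []
handle_result("walk", active_now, performed.append, confirm=lambda s: False)
handle_result("fight", active_now, performed.append, confirm=lambda s: False)
print(performed)
```

A cancel window before execution, the other fallback mentioned above, could reuse the same needs_confirmation check to decide when to offer it.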

Summary

Confusability.exe provides a sanity-check-level analysis for phrases in a grammar that have a potential to be misrecognized for one another.

Reminders and guidance:

Use the tool after the design process but before you go out and collect lots of speaker utterances.

Do not equate the returned metric directly with a probability; treat it as an indication of which pairs of phrases carry some risk of confusion.

If you see confusion in one direction, assume potential confusion in both directions. For example, your use of the tool might reveal that "foo bar" is confusable with "too far" but not vice-versa. For the sake of action items, assume that there's a risk that "too far" may be misrecognized as "foo bar."

Patterns more likely to be flagged by the tool include:

Near homonyms in which phrases differ by one or a few sounds (for example, "tog" and "pog").

Homonyms in which phrases are duplicated (for example, "cake" and "cake").

Equal phrase length can increase the likelihood of a confusable pair; however, shorter phrases also tend to be flagged as confusable more often than longer phrases.
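The first pattern, phrases that differ by one or a few sounds, can be roughly approximated with an edit distance over phone symbols. The following Python sketch is a crude pre-screen under stated assumptions, not a reimplementation of the tool. It assumes you already have a pronunciation for each phrase in the space-separated phone notation that appears in the tool's TranscriptPronunciation elements. The function names and the one-phone threshold are hypothetical choices. As Part I cautions, acoustic confusability does not reduce to phone similarity, so a check like this cannot find unintuitive pairs such as "stop" and "up".

```python
def phone_edit_distance(a, b):
    """Levenshtein distance between two space-separated phone strings."""
    x, y = a.split(), b.split()
    prev = list(range(len(y) + 1))
    for i, px in enumerate(x, start=1):
        cur = [i]
        for j, py in enumerate(y, start=1):
            cur.append(min(prev[j] + 1,                # delete px
                           cur[j - 1] + 1,             # insert py
                           prev[j - 1] + (px != py)))  # substitute (or match)
        prev = cur
    return prev[-1]

def near_homonyms(pronunciations, max_distance=1):
    """List phrase pairs whose pronunciations differ by at most max_distance phones."""
    names = sorted(pronunciations)
    pairs = []
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            d = phone_edit_distance(pronunciations[first], pronunciations[second])
            if d <= max_distance:
                pairs.append((first, second, d))
    return pairs

# Pronunciations as reported in the DogControl example output.
prons = {"Fight": "F AI T", "Bite": "B AI T"}
print(near_homonyms(prons))
```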

The tool is not a substitute for testing with actual speech from end-users, which provides the most accurate insight into how a grammar affects the speech recognition experience. But in reality, collecting lots of user data may not be feasible or practical for all applications. Running Confusability.exe before pre-production testing of speech in the application can help identify some potentially confusable phrases.